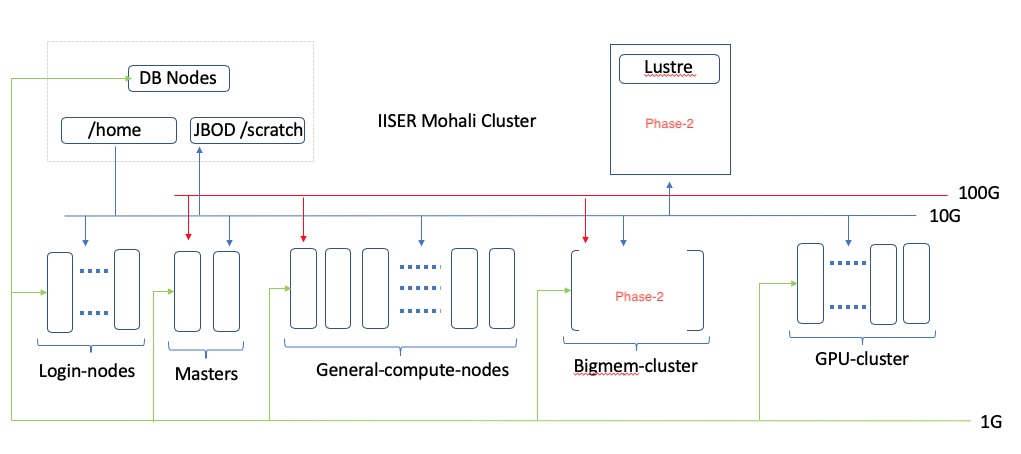

The High-Performance Computing (HPC) facility at IISER Mohali is a heterogeneous high-performance computing solution tailored to the computing requirements of the institute's research groups, with ample room for future upgrades.

The salient features of HPC:

- The HPC facility plays a pivotal role in supporting the computational needs of researchers, faculty, and students across departments and disciplines.

- The HPC facility fosters collaboration among researchers and data scientists within the IISER Mohali community by providing an environment conducive to sharing knowledge, resources, and expertise to tackle complex scientific problems and perform large-scale simulations and modeling.

- The required or preferred computing environment varies across research groups: CPU-based, GPU-based, or hybrid CPU+GPU workloads; single-node or multi-node parallelism exploited by the programs; and data- or memory-intensive research problems.

- A modular design that can accommodate additional computing nodes, or even entirely new clusters, in the future.

This solution has been designed to ensure high availability, modularity, and scalability of its components. The design involves more than the purchase of a high-availability computer cluster: the heterogeneity of computing requirements across departments makes it more complex and technically challenging. A heterogeneous HPC system consisting of several logical components has therefore been envisaged, to be implemented in several phases. When fully implemented, the system will be able to support and sustain the computing needs of research groups across the disciplines of IISER Mohali.

Considering the above requirements, IISER Mohali has recently augmented its HPC resources and successfully completed the Phase-I commissioning of the facility.

The HPC facility is equipped with cutting-edge hardware and software technologies to cater to the diverse computational needs of its faculty, researchers, and students. Here's an overview of the facility:

- Hardware: The HPC facility is equipped with a cluster of 36 CPU-only compute nodes, each with 2 x Intel(R) Xeon(R) Gold 6230R CPU @ 2.10GHz (26 cores per CPU), fast-access memory, and local scratch storage. The nodes are interconnected through a high-speed 10GBASE-T network and a low-latency 100G FDR InfiniBand network, enabling efficient parallel processing and data sharing among the computing resources.

- Hardware Accelerators: The facility incorporates 12 Nvidia Tesla T4 GPUs (each with 320 Turing Tensor Cores, 2560 CUDA cores, 130 TOPS of INT8 performance, and 16 GB of GDDR6 memory at 300 GB/sec), distributed across 3 host nodes. Each host node has 2 x Intel(R) Xeon(R) Gold 6230R CPU @ 2.10GHz (26 cores per CPU), large memory, and high-speed local disks to handle ML/AI workloads. Each GPU is capable of 8.1 TFLOPS of single-precision computation. These accelerators let researchers speed up complex simulations, data analysis, and machine learning tasks.

- Scalability and Performance: The computing cluster is designed for scalability, allowing easy expansion of computing resources to accommodate the growing demands of computational research in the future.

- Software Stack: The HPC facility at IISER Mohali provides a comprehensive software stack tailored to the diverse needs of the research community. It includes a range of scientific computing libraries, parallel processing tools, and popular programming languages such as Python, C/C++, and Fortran. It also supports a wide range of scientific software packages and modeling tools, such as ESPResSO, VASP, LAMMPS, SIESTA, CPMD, CP2K, ABINIT, NAMD, GROMACS, AMBER, Gaussian, Molpro, MATLAB, Mathematica, and ANSYS.

- Data Storage and Management: To support data-intensive research, the facility features a high-capacity 200TB JBOD as persistent storage for reliable access to large datasets, 30TB of global scratch, 7TB of local scratch, and 20TB of high-speed flash storage that hosts all user data. Researchers can store and analyze their data securely within the HPC infrastructure. The entire system is managed and controlled by a dedicated master node, database servers, and load balancers, all running open-source software.
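To give a rough sense of scale, the hardware figures quoted in this overview can be tallied with a few lines of Python. This is only an illustrative sketch: the class and variable names are hypothetical, it assumes the 3 GPU host nodes are separate from the 36 CPU-only nodes, and the aggregate single-precision figure is a theoretical peak obtained by simple multiplication, not a measured benchmark.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeGroup:
    """A homogeneous group of nodes (hypothetical helper for this tally)."""
    name: str
    nodes: int
    sockets_per_node: int
    cores_per_socket: int

    @property
    def total_cores(self) -> int:
        return self.nodes * self.sockets_per_node * self.cores_per_socket


# Figures from the overview: 2 x Xeon Gold 6230R (26 cores each) per node.
cpu_nodes = NodeGroup("cpu-only", nodes=36, sockets_per_node=2, cores_per_socket=26)
# Assumption: the GPU host nodes are counted in addition to the CPU-only nodes.
gpu_hosts = NodeGroup("gpu-host", nodes=3, sockets_per_node=2, cores_per_socket=26)

GPU_COUNT = 12              # Nvidia Tesla T4 cards
CUDA_CORES_PER_GPU = 2560
FP32_TFLOPS_PER_GPU = 8.1   # single-precision peak per card
GPU_MEMORY_GB = 16

cpu_only_cores = cpu_nodes.total_cores
gpu_host_cores = gpu_hosts.total_cores
total_cuda_cores = GPU_COUNT * CUDA_CORES_PER_GPU
# Theoretical aggregate only; real workloads will not reach this figure.
peak_fp32_tflops = round(GPU_COUNT * FP32_TFLOPS_PER_GPU, 1)
total_gpu_memory_gb = GPU_COUNT * GPU_MEMORY_GB

print(f"CPU cores on CPU-only nodes: {cpu_only_cores}")
print(f"CPU cores on GPU host nodes: {gpu_host_cores}")
print(f"CUDA cores across all GPUs:  {total_cuda_cores}")
print(f"Aggregate peak FP32 TFLOPS:  {peak_fp32_tflops}")
print(f"Total GPU memory (GB):       {total_gpu_memory_gb}")
```

Storage is left out of the tally on purpose, since the overview does not say whether the local scratch figure is per node or facility-wide.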


Since its commissioning, the HPC facility has been used by researchers from several departments and is running at full capacity. Ongoing activities include computational fluid dynamics (CFD), machine learning and artificial intelligence (ML/AI), astrodynamics, biomolecular simulations, computational chemistry, computational biology, environmental and weather modeling, and several other areas of science and technology. The HPC facility is established as a central facility coordinated by Dr. Satyajit Jena, the Convenor of the facility, in consultation with the Faculty In-charge of the Computer Centre, the Dean of R&D, the Heads of all departments, and the Director of the institute.

Further details of the HPC facility are available on the institute intranet (for internal use only).